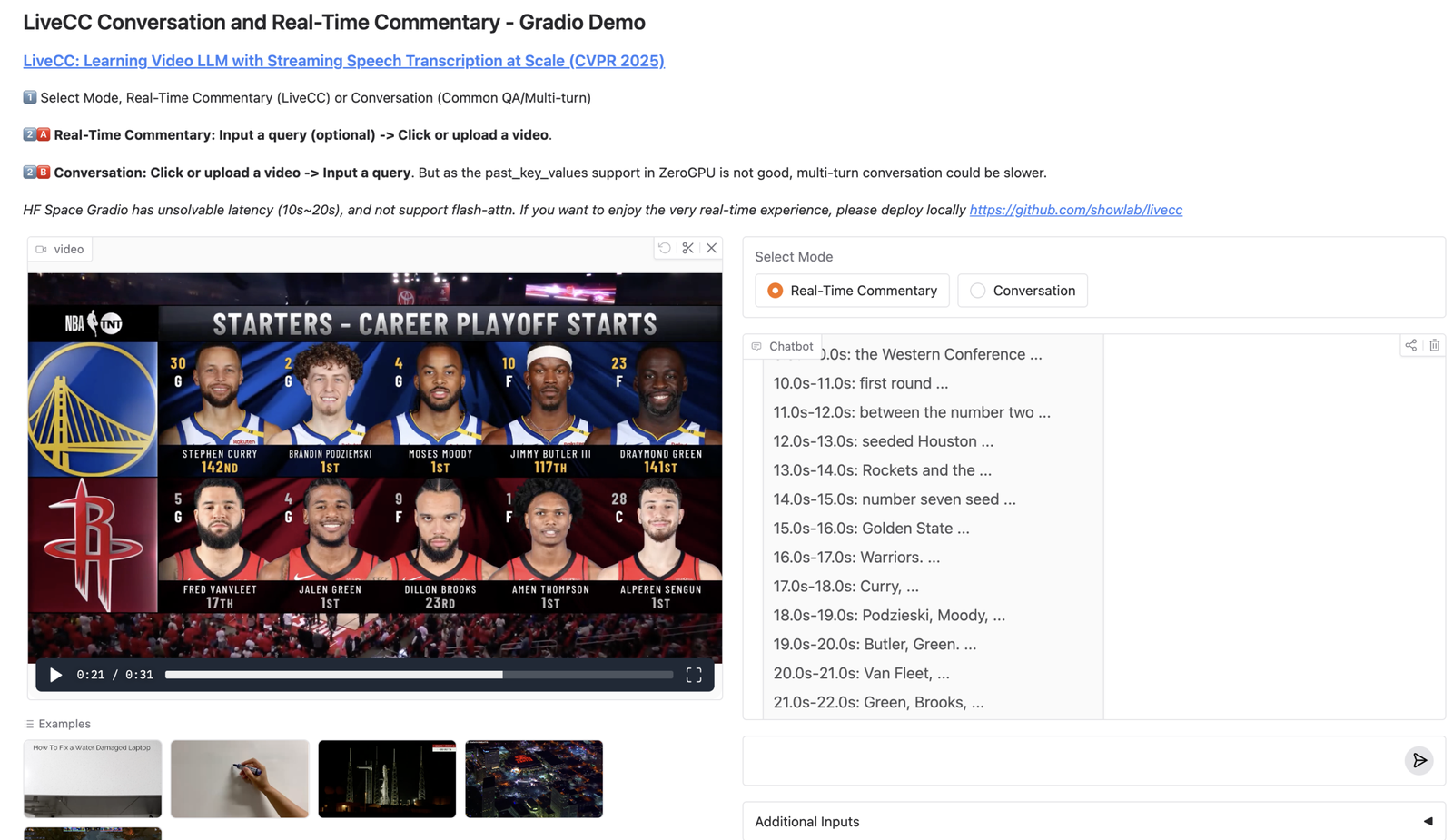

Dash is an open-source, self-learning data agent inspired by OpenAI’s in-house data agent. The goal is ambitious but very practical: let teams ask questions in plain English and reliably get correct, meaningful answers grounded in real business context, not just “rows from SQL.”

This post is a deep, enterprise-style guide. We’ll cover what Dash is, why text-to-SQL breaks in real organizations, Dash’s “6 layers of context”, the self-learning loop, architecture and deployment, security/permissions, and the practical playbook for adopting a data agent without breaking trust.

TL;DR

- Dash is designed to answer data questions by grounding in context + memory, not just schema.

- It uses 6 context layers (tables, business rules, known-good query patterns, docs via MCP, learnings, runtime schema introspection).

- The self-learning loop stores error patterns and fixes so the same failure doesn’t repeat.

- Enterprise value comes from reliable answers + explainability + governance (permissions, auditing, safe logging).

- Start narrow: pick 10–20 high-value questions, validate outputs, then expand coverage.

Table of Contents

- What is Dash?

- Why text-to-SQL breaks in practice

- The six layers of context (explained)

- The self-learning loop (gpu-poor continuous learning)

- Reference architecture (agent, DB, knowledge, UI)

- How to run Dash locally (Docker) + connect UI

- Enterprise use cases (detailed)

- Governance: permissions, safety, and auditing

- Evaluation: how to measure correctness and trust

- Observability for data agents

- Tools & platforms (official + GitHub links)

- FAQ

What is Dash?

Dash is a self-learning data agent that tries to solve a problem every company recognizes: data questions are easy to ask but hard to answer correctly. In mature organizations, the difficulty isn’t “writing SQL.” The difficulty is knowing what the SQL should mean—definitions, business rules, edge cases, and tribal knowledge that lives in people’s heads.

Dash’s design is simple to explain: take a question, retrieve relevant context from multiple sources, generate grounded SQL using known-good patterns, execute the query, and then interpret results in a way that produces an actual insight. When something fails, Dash tries to diagnose the error and store the fix as a “learning” so it doesn’t repeat.

Why text-to-SQL breaks in practice

Text-to-SQL demos look amazing. In production, they often fail in boring, expensive ways. Dash’s README lists several reasons, and they match real enterprise pain:

- Schemas lack meaning: tables and columns don’t explain how the business defines “active”, “revenue”, or “conversion.”

- Types are misleading: a column might be TEXT but contains numeric-like values; dates might be strings; NULLs might encode business states.

- Tribal knowledge is missing: “exclude internal users”, “ignore refunded orders”, “use approved_at not created_at.”

- No memory: the agent repeats the same mistakes because it cannot accumulate experience.

- Results lack interpretation: returning rows is not the same as answering a question.
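To make the “types are misleading” point concrete, here is a small sqlite3 sketch. The `orders` table and its TEXT `amount` column are hypothetical. Because the values are stored as strings, `MAX` compares them lexicographically and returns the wrong answer until the column is cast.

```python
import sqlite3

# Hypothetical table: amounts were loaded as TEXT, a common ingestion shortcut.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount TEXT)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "9"), (2, "10"), (3, "250")])

# Naive query: MAX on a TEXT column compares strings, so "9" beats "250".
naive_max = conn.execute("SELECT MAX(amount) FROM orders").fetchone()[0]

# Grounded query: cast explicitly, the kind of fix a stored learning would encode.
typed_max = conn.execute("SELECT MAX(CAST(amount AS INTEGER)) FROM orders").fetchone()[0]

print(naive_max, typed_max)  # prints: 9 250
```

The query runs without error in both cases, which is exactly why this class of bug survives demos and surfaces in production.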

The enterprise insight: correctness is not a single model capability but a property of the whole system. You need context retrieval, validated patterns, governance, and feedback loops.

The six layers of context (explained)

Dash grounds answers in “6 layers of context.” Think of this as the minimum viable knowledge graph a data agent needs to behave reliably.

Layer 1: Table usage (schema + relationships)

This layer captures what the schema is and how tables relate. In production, the schema alone isn’t enough—but it is the starting point for safe query generation and guardrails.

Layer 2: Human annotations (business rules)

Human annotations encode definitions and rules. For example: “Net revenue excludes refunds”, “Active user means logged in within 30 days”, “Churn is calculated at subscription_end.” This is the layer that makes answers match how leadership talks about metrics.

Layer 3: Query patterns (known-good SQL)

Query patterns are the highest-ROI asset in enterprise analytics. These are SQL snippets that are known to work and have been approved by your data team. Dash uses these patterns to generate queries that are more likely to be correct than “raw LLM SQL.”
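A minimal sketch of pattern-first retrieval, assuming patterns are tagged with keywords and scored by overlap with the question. A real system would more likely use embeddings; `QueryPattern`, `find_pattern`, the table names, and the overlap threshold are all hypothetical.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

@dataclass
class QueryPattern:
    name: str
    keywords: set[str]  # terms this validated pattern answers
    sql: str            # known-good SQL approved by the data team (hypothetical tables)

PATTERNS = [
    QueryPattern("net_revenue_by_month", {"net", "revenue", "month", "monthly"},
                 "SELECT month, SUM(net_amount) AS net_revenue FROM revenue_v GROUP BY month"),
    QueryPattern("weekly_active_users", {"active", "users", "weekly", "wau"},
                 "SELECT week, COUNT(DISTINCT user_id) FROM logins_v WHERE is_internal = 0 GROUP BY week"),
]

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def find_pattern(question: str, min_overlap: int = 2) -> QueryPattern | None:
    """Return the pattern sharing the most keywords with the question, if it clears the bar."""
    words = tokenize(question)
    best = max(PATTERNS, key=lambda p: len(p.keywords & words))
    return best if len(best.keywords & words) >= min_overlap else None

match = find_pattern("What was net revenue by month this year?")
print(match.name if match else "no pattern: fall back to generation")  # net_revenue_by_month
```

Returning `None` below the threshold matters: a weak match should fall back to grounded generation rather than force an unrelated pattern onto the question.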

Layer 4: Institutional knowledge (docs via MCP)

In enterprises, the most important context lives in docs: dashboards, wiki pages, product specs, incident notes. Dash can optionally pull institutional knowledge via MCP (Model Context Protocol), making the agent more “organizationally aware.”

Layer 5: Learnings (error patterns + fixes)

This is the differentiator: instead of repeating mistakes, Dash stores learnings like “column X is TEXT”, “this join needs DISTINCT”, or “use approved_at not created_at.” This turns debugging effort into a reusable asset.
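A learning only pays off if the same failure is recognized the next time it happens. One way to do that, sketched here under the assumption that learnings are keyed by error message, is to normalize the message into a signature by masking quoted literals and numbers. `error_signature` and `LearningsStore` are hypothetical; Dash’s actual storage may differ.

```python
from __future__ import annotations

import re

def error_signature(message: str) -> str:
    """Collapse an error message into a reusable key by masking literals and numbers."""
    sig = re.sub(r"'[^']*'|\"[^\"]*\"", "<lit>", message)  # quoted identifiers/values
    sig = re.sub(r"\b\d+\b", "<n>", sig)                    # positions, line numbers
    return sig.strip().lower()

class LearningsStore:
    """Hypothetical in-memory store mapping error signatures to fixes."""
    def __init__(self) -> None:
        self._fixes: dict[str, str] = {}

    def record(self, error_message: str, fix: str) -> None:
        self._fixes[error_signature(error_message)] = fix

    def lookup(self, error_message: str) -> str | None:
        return self._fixes.get(error_signature(error_message))

store = LearningsStore()
store.record("operator does not exist: text > integer at character 42",
             "Column amount is TEXT; cast with amount::numeric before comparing.")
# A later failure at a different position maps to the same learning.
print(store.lookup("operator does not exist: text > integer at character 57"))
```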

Layer 6: Runtime context (live schema introspection)

Enterprise schemas change. Runtime introspection lets the agent detect changes and adapt. This reduces failures caused by “schema drift” and makes the agent more resilient day-to-day.
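A sketch of runtime introspection with drift detection. It uses SQLite’s `sqlite_master` and `PRAGMA table_info` so it runs anywhere; on Postgres or a warehouse the equivalent source would be `information_schema.columns`. The helper names are hypothetical.

```python
import sqlite3

def snapshot_schema(conn: sqlite3.Connection) -> dict[str, dict[str, str]]:
    """Map each table to {column: declared_type} using SQLite's catalog."""
    tables = [r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    schema = {}
    for table in tables:
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        cols = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        schema[table] = {c[1]: c[2] for c in cols}
    return schema

def diff_schema(old: dict, new: dict) -> list[str]:
    """Describe drift between two snapshots so cached context can be refreshed."""
    changes = []
    for table in sorted(old.keys() | new.keys()):
        before, after = old.get(table, {}), new.get(table, {})
        for col in sorted(before.keys() - after.keys()):
            changes.append(f"{table}.{col} removed")
        for col in sorted(after.keys() - before.keys()):
            changes.append(f"{table}.{col} added ({after[col]})")
        for col in sorted(before.keys() & after.keys()):
            if before[col] != after[col]:
                changes.append(f"{table}.{col} type {before[col]} -> {after[col]}")
    return changes

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, created_at TEXT)")
cached = snapshot_schema(conn)
conn.execute("ALTER TABLE orders ADD COLUMN approved_at TEXT")  # upstream schema change
print(diff_schema(cached, snapshot_schema(conn)))  # ['orders.approved_at added (TEXT)']
```

Running the diff before generation (or on a schedule) lets the agent invalidate stale table context instead of discovering drift through failed queries.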

The self-learning loop (gpu-poor continuous learning)

Dash calls its approach “gpu-poor continuous learning”: it improves without fine-tuning. Instead, it learns operationally by storing validated knowledge and automatic learnings. In enterprise terms, this is important because it avoids retraining cycles and makes improvements immediate.

In practice, the loop looks like this:

Question → retrieve context → generate SQL → execute → interpret

- Success: optionally save as a validated query pattern.

- Failure: diagnose → fix → store as a learning.

The enterprise win is that debugging becomes cumulative. Over time, the agent becomes “trained on your reality” without needing a training pipeline.
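The loop above can be sketched end to end with sqlite3. `generate_sql` and `diagnose` are hypothetical stand-ins for model calls (in Dash these steps are LLM-driven), and the lists stand in for the knowledge and learnings stores. On failure the loop records a learning and retries; on success it saves the SQL as a candidate pattern pending human validation.

```python
import sqlite3

learnings: list[str] = []           # error patterns + fixes (layer 5)
candidate_patterns: list[str] = []  # successful SQL awaiting human validation

def generate_sql(question: str) -> str:
    """Hypothetical stand-in for the LLM: it only 'knows' the fix once a learning exists."""
    if any("status column" in note for note in learnings):
        return "SELECT COUNT(*) FROM orders WHERE status = 'approved'"
    return "SELECT COUNT(*) FROM orders WHERE approved = 1"  # wrong guess about the schema

def diagnose(error: Exception) -> str:
    """Hypothetical diagnosis step; a real agent would reason over the error and schema."""
    return f"{error}; approval lives in the status column, not an approved flag"

def answer(conn: sqlite3.Connection, question: str, max_attempts: int = 2) -> list:
    for _ in range(max_attempts):
        sql = generate_sql(question)
        try:
            rows = conn.execute(sql).fetchall()
        except sqlite3.Error as exc:
            learnings.append(diagnose(exc))  # store the fix so it is not repeated
            continue
        candidate_patterns.append(sql)       # success: candidate for a validated pattern
        return rows
    raise RuntimeError("could not answer; escalate to a human")

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, status TEXT)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "approved"), (2, "pending")])
print(answer(conn, "How many orders were approved?"))  # [(1,)]
print(len(learnings))  # 1
```

Asking the same question again succeeds on the first attempt and adds no new learning, which is the “debugging becomes cumulative” property in miniature.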

Reference architecture

A practical production deployment for Dash (or any data agent) has four pieces: the agent API, the database connection layer, the knowledge/learnings store, and the user interface. Dash supports connecting to a web UI at os.agno.com, and can run locally via Docker.

User (Analyst/PM/Eng) -> Web UI -> Dash API (agent), which connects to:

- DB (Postgres/warehouse)

- Knowledge store (tables/business rules/query patterns)

- Learnings store (error patterns)

- Optional: MCP connectors (docs/wiki)

How to run Dash locally

Dash provides a Docker-based quick start. At a high level:

```bash
git clone https://github.com/agno-agi/dash.git
cd dash
cp example.env .env
docker compose up -d --build
docker exec -it dash-api python -m dash.scripts.load_data
docker exec -it dash-api python -m dash.scripts.load_knowledge
```

Then connect a UI client to your local Dash API (the repo suggests using os.agno.com as the UI) by configuring the local endpoint.

Enterprise use cases (detailed)

1) Self-serve analytics for non-technical teams

Dash can reduce “data team bottlenecks” by letting PMs, Support, Sales Ops, and Leadership ask questions safely. The trick is governance: restrict which tables can be accessed, enforce approved metrics, and log queries. When done right, you get faster insights without chaos.

2) Faster incident response (data debugging)

During incidents, teams ask: “What changed?”, “Which customers are impacted?”, “Is revenue down by segment?” A data agent that knows query patterns and business rules can accelerate this, especially if it can pull institutional knowledge from docs/runbooks.

3) Metric governance and consistency

Enterprises often have “metric drift” where different teams compute the same metric differently. By centralizing human annotations and validated query patterns, Dash can become a layer that enforces consistent definitions across the organization.

4) Analyst acceleration

For analysts, Dash can act like a co-pilot: draft queries grounded in known-good patterns, suggest joins, and interpret results. This is not a replacement for analysts—it’s a speed multiplier, especially for repetitive questions.

Governance: permissions, safety, and auditing

Enterprise data agents must be governed. The minimum requirements:

- Permissions: table-level and column-level access. Never give the agent broad DB credentials.

- Query safety: restrict destructive SQL; enforce read-only access by default.

- Audit logs: log user, question, SQL, and results metadata (with redaction).

- PII handling: redact sensitive fields; set short retention for raw outputs.

This is where “enterprise-level” differs from demos. The fastest way to lose trust is a single incorrect answer or a single privacy incident.
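A sketch of read-only enforcement in two layers, using SQLite so it runs standalone. The first layer is a deliberately naive statement pre-check (hypothetical); the real control is the database itself, here SQLite’s `PRAGMA query_only`. On Postgres the analogous backstop would be a role granted only `SELECT`, optionally with `default_transaction_read_only`.

```python
import sqlite3

ALLOWED_FIRST_KEYWORDS = {"select", "with"}

def check_read_only(sql: str) -> None:
    """Cheap pre-check (naive: splits on ';', so literals containing ';' are rejected too)."""
    statements = [s for s in sql.split(";") if s.strip()]
    if len(statements) != 1:
        raise PermissionError("exactly one statement allowed")
    first_word = statements[0].split(None, 1)[0].lower()
    if first_word not in ALLOWED_FIRST_KEYWORDS:
        raise PermissionError(f"{first_word.upper()} statements are not allowed")

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER, email TEXT)")
conn.execute("PRAGMA query_only = ON")  # database-level backstop: any write now fails

def run_agent_sql(sql: str) -> list:
    check_read_only(sql)
    return conn.execute(sql).fetchall()

print(run_agent_sql("SELECT COUNT(*) FROM users"))  # [(0,)]
for attempt in ["DELETE FROM users",
                "SELECT 1; DROP TABLE users",
                "WITH ids AS (SELECT id FROM users) DELETE FROM users"]:  # passes the pre-check
    try:
        run_agent_sql(attempt)
    except (PermissionError, sqlite3.Error) as exc:
        print(f"blocked: {exc}")
```

The third statement is the reason the pre-check alone is not enough: it starts with `WITH` yet writes, and only the database-level setting stops it.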

Evaluation: how to measure correctness and trust

Don’t measure success as “the model responded.” Measure: correctness, consistency, and usefulness. A practical evaluation framework:

- SQL correctness: does it run and match expected results on golden questions?

- Metric correctness: does it follow business definitions?

- Explainability: can it cite which context layer drove the answer?

- Stability: does it produce the same answer for the same question across runs?
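A minimal sketch of scoring two of these metrics on a golden set: correctness (the majority answer matches the expected result) and stability (all runs agree). `ask_agent` is a hypothetical callable returning result rows; the golden questions and the flaky fake agent are illustrative only.

```python
import itertools
from collections import Counter
from typing import Callable

# Hypothetical golden set: question -> expected result rows (pinned to closed periods).
GOLDEN = {
    "How many orders were approved in week 2024-W10?": [(42,)],
    "What was net revenue in March 2024?": [(10500.0,)],
}

def evaluate(ask_agent: Callable[[str], list], runs: int = 3) -> dict:
    """Score correctness (majority answer matches expected) and stability (all runs agree)."""
    correct = stable = 0
    for question, expected in GOLDEN.items():
        votes = Counter(repr(ask_agent(question)) for _ in range(runs))
        stable += len(votes) == 1
        correct += votes.most_common(1)[0][0] == repr(expected)
    n = len(GOLDEN)
    return {"correctness": correct / n, "stability": stable / n}

# Fake agent for illustration: stable on the first question, flaky on the second.
flaky = itertools.cycle([[(10500.0,)], [(11200.0,)]])
def fake_agent(question: str) -> list:
    return [(42,)] if question.startswith("How many") else next(flaky)

print(evaluate(fake_agent))  # {'correctness': 1.0, 'stability': 0.5}
```

Reporting the two numbers separately is the point: an agent can be right by majority vote and still be unstable enough to erode trust.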

Observability for data agents

Data agents need observability like any production system: trace each question as a run, log which context was retrieved, track SQL execution errors, and monitor latency/cost. This is where standard LLM observability patterns (audit logs, traces, retries) directly apply.
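A sketch of treating each question as a traced run: a context manager records a run ID, the retrieved context, the SQL, status, and latency. The field names are hypothetical, and the in-memory `TRACES` list stands in for a real tracing backend.

```python
import time
import uuid
from contextlib import contextmanager

TRACES: list[dict] = []  # stand-in for a tracing backend

@contextmanager
def traced_run(question: str):
    run = {"run_id": str(uuid.uuid4()), "question": question,
           "context": [], "sql": None, "status": "ok", "error": None}
    start = time.perf_counter()
    try:
        yield run
    except Exception as exc:
        run["status"], run["error"] = "error", repr(exc)
        raise
    finally:
        run["latency_ms"] = round((time.perf_counter() - start) * 1000, 2)
        TRACES.append(run)  # recorded whether the run succeeded or failed

with traced_run("Weekly active users?") as run:
    run["context"].append("annotation:active_user_definition")
    run["sql"] = "SELECT COUNT(DISTINCT user_id) FROM logins_v"

print(TRACES[-1]["status"], TRACES[-1]["context"])
```

Logging which context entries were retrieved is what later makes “why did the agent answer this way?” answerable.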

Tools & platforms (official + GitHub links)

- Dash (GitHub): github.com/agno-agi/dash

- OpenAI article (inspiration): Inside OpenAI’s in-house data agent

- Agno OS UI: os.agno.com

FAQ

Is Dash a replacement for dbt / BI tools?

No. Dash is a question-answer interface on top of your data. BI and transformation tools are still foundational. Dash becomes most valuable when paired with strong metric definitions and curated query patterns.

How do I prevent hallucinated SQL?

Use known-good query patterns, enforce schema introspection, restrict access to approved tables, and evaluate on golden questions. Also store learnings from failures so the agent improves systematically.
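A naive sketch of the “approved tables” check: extract identifiers that follow `FROM` or `JOIN` and compare them to an allowlist. A regex is not a SQL parser (it misses CTE aliases, quoted names, and more), so treat this as an illustration; database grants remain the authoritative control. The table names are hypothetical.

```python
import re

APPROVED_TABLES = {"analytics.orders_v", "analytics.users_masked_v"}

TABLE_REF = re.compile(r"\b(?:from|join)\s+([a-z_][\w.]*)", re.IGNORECASE)

def unapproved_tables(sql: str) -> set[str]:
    """Return referenced tables that are not on the allowlist (naive regex, no CTE handling)."""
    referenced = {name.lower() for name in TABLE_REF.findall(sql)}
    return referenced - APPROVED_TABLES

sql = """
SELECT u.plan, COUNT(*)
FROM analytics.orders_v o
JOIN raw.users u ON u.id = o.user_id
GROUP BY u.plan
"""
print(unapproved_tables(sql))  # {'raw.users'}
```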

A practical enterprise adoption playbook (30 days)

Data agents fail in enterprises for the same reason chatbots fail: people stop trusting them. The fastest path to trust is to start narrow, validate answers, and gradually expand the scope. Here’s a pragmatic 30-day adoption playbook for Dash or any similar data agent.

Week 1: Define scope + permissions

Pick one domain (e.g., product analytics, sales ops, support) and one dataset. Define what the agent is allowed to access: tables, views, columns, and row-level constraints. In most enterprises, the right first step is creating a read-only analytics role and exposing only curated views that already encode governance rules (e.g., masked PII).

Then define 10–20 “golden questions” that the team regularly asks. These become your evaluation set and your onboarding story. If the agent cannot answer golden questions correctly, do not expand the scope—fix context and query patterns first.

Week 2: Curate business definitions and query patterns

Most failures come from missing definitions: what counts as active, churned, refunded, or converted. Encode those as human annotations. Then add a handful of validated query patterns (known-good SQL) for your most important metrics. In practice, 20–50 patterns cover a surprising amount of day-to-day work because they compose well.

At the end of Week 2, your agent should be consistent: for the same question, it should generate similar SQL and produce similar answers. Consistency builds trust faster than cleverness.

Week 3: Add the learning loop + monitoring

Now turn failures into assets. When the agent hits a schema gotcha (TEXT vs INT, nullable behavior, time zones), store the fix as a learning. Add basic monitoring: error rate, SQL execution time, cost per question, and latency. In enterprise rollouts, monitoring is not optional—without it you can’t detect regressions or misuse.
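A sketch of computing the Week 3 monitoring metrics from per-run records. The record fields and costs are hypothetical; latency uses a nearest-rank p95, and SQL time is averaged over successful runs only.

```python
import math

# Hypothetical per-run records, e.g. exported from the audit log.
runs = [
    {"status": "ok", "latency_ms": 820, "sql_ms": 310, "cost_usd": 0.004},
    {"status": "ok", "latency_ms": 1430, "sql_ms": 900, "cost_usd": 0.006},
    {"status": "error", "latency_ms": 2950, "sql_ms": None, "cost_usd": 0.009},
    {"status": "ok", "latency_ms": 640, "sql_ms": 120, "cost_usd": 0.003},
]

def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile; simple and adequate for small windows."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize(records: list[dict]) -> dict:
    ok = [r for r in records if r["status"] == "ok"]
    n = len(records)
    return {
        "error_rate": sum(r["status"] == "error" for r in records) / n,
        "p95_latency_ms": percentile([r["latency_ms"] for r in records], 95),
        "avg_sql_ms": round(sum(r["sql_ms"] for r in ok) / len(ok), 1) if ok else None,
        "cost_per_question_usd": round(sum(r["cost_usd"] for r in records) / n, 4),
    }

print(summarize(runs))
```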

Week 4: Expand access + establish governance

Only after you have stable answers and monitoring should you expand to more teams. Establish governance: who can add new query patterns, who approves business definitions, and how you handle sensitive questions. Create an “agent changelog” so teams know when definitions or behaviors change.

Prompting patterns that reduce hallucinations

Even with context, LLMs can still guess. The trick is to make the system ask itself: “What do I know, and what is uncertain?” Good prompting patterns for data agents include:

- Require citations to context layers: when the agent uses a business rule, it should mention which annotation/pattern drove it.

- Force intermediate planning: intent → metric definition → tables → joins → filters → final SQL.

- Use query pattern retrieval first: if a known-good pattern exists, reuse it rather than generating from scratch.

- Ask clarifying questions when ambiguity is high (e.g., “revenue” could mean gross, net, or recognized).

Enterprises prefer an agent that asks one clarifying question over an agent that confidently answers the wrong thing.
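A minimal sketch of an ambiguity gate that runs before SQL generation: if the question uses a term with several approved definitions but no qualifier, the agent returns a clarifying question instead. The `AMBIGUOUS_TERMS` table is hypothetical; in practice it would be derived from your human annotations.

```python
from __future__ import annotations

AMBIGUOUS_TERMS = {
    # term -> approved interpretations (hypothetical, from human annotations)
    "revenue": ["gross", "net", "recognized"],
    "active": ["daily", "weekly", "monthly"],
}

def clarify_or_proceed(question: str) -> str | None:
    """Return a clarifying question if an ambiguous metric lacks a qualifier, else None."""
    words = question.lower().replace("?", " ").split()
    for term, options in AMBIGUOUS_TERMS.items():
        if term in words and not any(opt in words for opt in options):
            return f'"{term}" could mean {", ".join(options)}. Which one do you need?'
    return None

print(clarify_or_proceed("What was revenue last month?"))
print(clarify_or_proceed("What was net revenue last month?"))  # None: safe to proceed
```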

Security model (the non-negotiables)

If you deploy Dash in an enterprise, treat it like any system that touches production data. A practical security baseline:

- Read-only by default: the agent should not be able to write/update tables.

- Scoped credentials: one credential per environment; rotate regularly.

- PII minimization: expose curated views that mask PII; don’t rely on the agent to “not select” sensitive columns.

- Audit logging: store question, SQL, and metadata (who asked, when, runtime, status) with redaction.

- Retention: short retention for raw outputs; longer retention for aggregated metrics and logs.
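A sketch of an audit record that captures who asked, when, the question, the SQL, runtime, and status, with regex redaction applied before anything is persisted. The two patterns (emails and long digit runs) are hypothetical and far from complete PII detection; raw result rows are intentionally left out of the log.

```python
import json
import re
from datetime import datetime, timezone

REDACTIONS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "<email>"),
    (re.compile(r"\b\d{9,}\b"), "<number>"),  # account/card-like digit runs
]

def redact(text: str) -> str:
    for pattern, placeholder in REDACTIONS:
        text = pattern.sub(placeholder, text)
    return text

def audit_record(user: str, question: str, sql: str, runtime_ms: int, status: str) -> str:
    """Build one JSON audit line; the requester's identity is kept, free text is redacted."""
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "question": redact(question),
        "sql": redact(sql),
        "runtime_ms": runtime_ms,
        "status": status,
    })

line = audit_record("pm@example.com", "Orders for jane.doe@acme.io?",
                    "SELECT * FROM orders_v WHERE email = 'jane.doe@acme.io'", 412, "ok")
print(line)
```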

Dash vs classic BI vs semantic layer

Dash isn’t a replacement for BI or semantic layers. Think of it as an interface and reasoning layer on top of your existing analytics stack. In a mature setup:

- dbt / transformations produce clean, modeled tables.

- Semantic layer defines metrics consistently.

- BI dashboards provide recurring visibility for known questions.

- Dash data agent handles the “long tail” of questions and accelerates exploration—while staying grounded in definitions and patterns.

More enterprise use cases (concrete)

5) Customer segmentation and cohort questions

Product and growth teams constantly ask cohort and segmentation questions (activation cohorts, retention by segment, revenue by plan). Dash becomes valuable when it can reuse validated cohort SQL patterns and only customize filters and dimensions. This reduces the risk of subtle mistakes in time windows or joins.
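A sketch of reusing a validated cohort pattern where the agent may only choose the dimension and the date window. The template, table, and columns are hypothetical. Identifiers cannot be bound as SQL parameters, so the dimension is checked against an allowlist, while dates are passed as bound parameters.

```python
import sqlite3

# Validated pattern: the activation logic is fixed; only the dimension and dates vary.
COHORT_PATTERN = """
SELECT {dimension} AS segment,
       COUNT(DISTINCT user_id) AS activated_users
FROM signups
WHERE signup_date >= :start AND signup_date < :end
  AND activated_within_7d = 1
GROUP BY {dimension}
ORDER BY segment
"""
ALLOWED_DIMENSIONS = {"plan", "region", "channel"}

def render_cohort_query(dimension: str) -> str:
    if dimension not in ALLOWED_DIMENSIONS:
        raise ValueError(f"dimension {dimension!r} is not approved for this pattern")
    return COHORT_PATTERN.format(dimension=dimension)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE signups (user_id INT, plan TEXT, region TEXT, channel TEXT,"
             " signup_date TEXT, activated_within_7d INT)")
conn.executemany("INSERT INTO signups VALUES (?, ?, ?, ?, ?, ?)", [
    (1, "pro", "EU", "ads", "2024-03-02", 1),
    (2, "free", "US", "seo", "2024-03-05", 1),
    (3, "pro", "US", "ads", "2024-03-09", 0),
    (4, "pro", "EU", "seo", "2024-04-01", 1),  # outside the March window
])
sql = render_cohort_query("plan")
print(conn.execute(sql, {"start": "2024-03-01", "end": "2024-04-01"}).fetchall())
```

Because the half-open window (`>= start AND < end`) lives in the pattern, the agent cannot reintroduce the off-by-one-day errors that hand-written cohort SQL often contains.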

6) Finance and revenue reconciliation (with strict rules)

Finance questions are sensitive because wrong answers cause real business harm. The right approach is to encode strict business rules and approved query patterns, and prevent the agent from inventing formulas. In many cases, Dash can still help by retrieving the correct approved pattern and presenting an interpretation, while the SQL remains governed.

7) Support operations insights

Support leaders want answers like “Which issue category spiked this week?”, “Which release increased ticket volume?”, and “What is SLA breach rate by channel?” These questions require joining tickets, product events, and release data—exactly the kind of work where context layers and known-good patterns reduce failure rates.

Evaluation: build a golden set and run it daily

Enterprise trust is earned through repeatability. Create a golden set of questions with expected results (or expected SQL patterns). Run it daily (or on each change to knowledge). Track deltas. If the agent’s answers drift, treat it like a regression.
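A sketch of tracking deltas against a stored baseline. It assumes golden questions are pinned to closed periods, so their answers should not move unless something regressed; the question keys and values are hypothetical.

```python
import math

def find_regressions(baseline: dict[str, float], today: dict[str, float],
                     rel_tol: float = 1e-6) -> list[str]:
    """List golden questions whose answer disappeared or drifted from the baseline."""
    regressions = []
    for question, expected in baseline.items():
        actual = today.get(question)
        if actual is None:
            regressions.append(f"{question}: no answer")
        elif not math.isclose(actual, expected, rel_tol=rel_tol):
            regressions.append(f"{question}: {expected} -> {actual}")
    return regressions

baseline = {"active users 2024-W10": 1834.0, "net revenue 2024-03": 10500.0}
today = {"active users 2024-W10": 1834.0, "net revenue 2024-03": 11200.0}
print(find_regressions(baseline, today))  # ['net revenue 2024-03: 10500.0 -> 11200.0']
```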

Also evaluate explanation quality: does the agent clearly state assumptions, definitions, and limitations? Many enterprise failures aren’t “wrong SQL”—they are wrong assumptions.

Operating Dash in production

Once deployed, you need operational discipline: backups for knowledge/learnings, a review process for new query patterns, and incident playbooks for when the agent outputs something suspicious. Treat the agent like a junior analyst: helpful, fast, but always governed.

Guardrails: what to restrict (and why)

Most enterprise teams underestimate how quickly a data agent can create risk. Even a read-only agent can leak sensitive information if it can query raw tables. A safe starting point is to expose only curated, masked views and to enforce row-level restrictions by tenant or business unit. If your company has regulated data (finance, healthcare), the agent should never touch raw PII tables.

Also restrict query complexity. Allowing the agent to run expensive cross joins or unbounded queries can overload warehouses. Guardrails like max runtime, max scanned rows, and required date filters prevent cost surprises and outages.
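A SQLite sketch of three query budgets: a required date filter (a naive regex on a hypothetical `event_date` column), a runtime cap via `Connection.set_progress_handler`, which aborts the query when the handler returns non-zero, and a row cap via `fetchmany`. Warehouses have native equivalents (for example, Postgres `statement_timeout`) that should be preferred in production.

```python
import re
import sqlite3
import time

MAX_SECONDS = 0.5
MAX_ROWS = 1000
DATE_FILTER = re.compile(r"\bwhere\b.*\bevent_date\b", re.IGNORECASE | re.DOTALL)

def run_with_budget(conn: sqlite3.Connection, sql: str) -> list:
    if not DATE_FILTER.search(sql):
        raise ValueError("query must filter on event_date")
    deadline = time.monotonic() + MAX_SECONDS
    # Called every 10,000 VM instructions; a non-zero return interrupts the query.
    conn.set_progress_handler(lambda: int(time.monotonic() > deadline), 10_000)
    try:
        return conn.execute(sql).fetchmany(MAX_ROWS)  # cap result size
    finally:
        conn.set_progress_handler(None, 0)  # remove the handler

conn = sqlite3.connect(":memory:")
# An unbounded recursive CTE: without a budget this would never finish.
runaway = """
WITH RECURSIVE n(x, event_date) AS (
  SELECT 1, '2024-01-01' UNION ALL SELECT x + 1, event_date FROM n)
SELECT COUNT(*) FROM n WHERE event_date >= '2024-01-01'
"""
try:
    run_with_budget(conn, runaway)
except sqlite3.OperationalError as exc:
    print("aborted after budget:", exc)
```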

UI/UX: the hidden key to adoption

Even the best agent fails if users don’t know how to ask questions. Enterprise adoption improves dramatically when the UI guides the user toward well-scoped queries, shows which definitions were used, and offers a “clarify” step when ambiguity is high. A good UI makes the agent feel safe and predictable.

For example, instead of letting the user ask “revenue last month” blindly, the UI can prompt: “Gross or net revenue?” and “Which region?” This is not friction—it is governance translated into conversation.

Implementation checklist (copy/paste)

- Create curated read-only DB views (mask PII).

- Define 10–20 golden questions and expected outputs.

- Write human annotations for key metrics (active, revenue, churn).

- Add 20–50 validated query patterns and tag them by domain.

- Enable learning capture for common SQL errors and schema gotchas.

- Set query budgets: runtime limits, scan limits, mandatory date filters.

- Enable audit logging with run IDs and redaction.

- Monitor: error rate, latency, cost per question, most-used queries.

- Establish governance: who approves new patterns and definitions.

Closing thought

Dash is interesting because it treats enterprise data work like a system: context, patterns, learnings, and runtime introspection. If you treat it as a toy demo, you’ll get toy results. If you treat it as a governed analytics interface with measurable evaluation, it can meaningfully reduce time-to-insight without sacrificing trust.

Extra: how to keep answers “insightful” (not just correct)

A subtle but important point in Dash’s philosophy is that users don’t want rows—they want conclusions. In enterprises, a useful answer often includes context like: scale (how big is it), trend (is it rising or falling), comparison (how does it compare to last period or peers), and confidence (any caveats or missing data). You can standardize this as an answer template so the agent consistently produces decision-ready outputs.
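A minimal sketch of such an answer template, covering scale, trend with a prior-period comparison, and confidence. `render_answer` and its arguments are hypothetical; peer comparisons would need an extra input.

```python
def render_answer(metric: str, current: float, previous: float, caveats: list[str]) -> str:
    """Hypothetical answer template: scale, trend + comparison, then confidence."""
    if previous:
        pct = (current - previous) / previous * 100
        trend = "up" if pct > 0 else "down" if pct < 0 else "flat"
        comparison = f"{trend} {abs(pct):.1f}% vs the prior period ({previous:,.0f})"
    else:
        comparison = "no prior-period baseline"
    confidence = ("Caveats: " + "; ".join(caveats) + ".") if caveats else "No known data gaps."
    return f"{metric} was {current:,.0f}, {comparison}. {confidence}"

print(render_answer("Net revenue", 11_200, 10_500, ["refunds for the last 2 days are not loaded yet"]))
# Net revenue was 11,200, up 6.7% vs the prior period (10,500). Caveats: refunds for the last 2 days are not loaded yet.
```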

This is also where knowledge and learnings help. If the agent knows the correct metric definition and the correct “comparison query pattern,” it can produce a narrative that is both correct and useful. Over time, the organization stops asking for SQL and starts asking for decisions.

One practical technique: store “explanation snippets” alongside query patterns. For example, the approved churn query pattern can carry a short explanation of how churn is defined and what is excluded. Then the agent can produce the narrative consistently and safely, even when different teams ask the same question in different words.

With that, Dash becomes more than a SQL generator. It becomes a governed analytics interface that speaks the organization’s language.

Operations: cost controls and rate limits

Enterprise deployments need predictable cost. Add guardrails: limit max query runtime, enforce date filters, and cap result sizes. On the LLM side, track token usage per question and set rate limits per user/team. The goal is to prevent one power user (or one runaway dashboard) from turning the agent into a cost incident.
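A sketch of per-team LLM rate limiting as a fixed daily token budget. The team names, limits, and the idea of reserving an estimated token count up front are assumptions; actual usage would come from the model provider’s usage fields and be reconciled afterwards.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date

DAILY_TOKEN_BUDGET = {"growth": 200_000, "finance": 100_000}  # hypothetical limits
DEFAULT_BUDGET = 50_000

class TokenBudget:
    """Fixed-window (per calendar day) token budget per team."""
    def __init__(self) -> None:
        self._used: defaultdict[tuple[str, date], int] = defaultdict(int)

    def allow(self, team: str, estimated_tokens: int, today: date | None = None) -> bool:
        """Reserve tokens if the team stays within today's budget; otherwise refuse."""
        key = (team, today or date.today())
        limit = DAILY_TOKEN_BUDGET.get(team, DEFAULT_BUDGET)
        if self._used[key] + estimated_tokens > limit:
            return False
        self._used[key] += estimated_tokens
        return True

budget = TokenBudget()
day = date(2024, 5, 1)
print(budget.allow("finance", 80_000, day), budget.allow("finance", 30_000, day))  # True False
```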

Finally, implement caching for repeated questions. In many organizations, the same questions get asked repeatedly in different words. If the agent can recognize equivalence and reuse validated results, you get better latency, lower cost, and higher consistency.
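A deliberately simple sketch of an answer cache with a time-to-live. Equivalence is approximated by normalizing the question (lowercase, drop filler words, sort tokens), which only catches trivial rephrasings and can over-match when word order matters; a production system would more likely match questions to the same validated pattern or use embeddings. All names are hypothetical.

```python
import re
import time

STOPWORDS = {"what", "was", "is", "the", "show", "me", "please", "our", "for", "of"}

def cache_key(question: str) -> str:
    tokens = re.findall(r"[a-z0-9]+", question.lower())
    return " ".join(sorted(t for t in tokens if t not in STOPWORDS))

class AnswerCache:
    def __init__(self, ttl_seconds: float = 3600) -> None:
        self.ttl = ttl_seconds
        self._entries: dict = {}  # key -> (stored_at, answer)

    def get(self, question: str):
        entry = self._entries.get(cache_key(question))
        if entry and time.monotonic() - entry[0] < self.ttl:
            return entry[1]
        return None  # missing or expired: recompute

    def put(self, question: str, answer) -> None:
        self._entries[cache_key(question)] = (time.monotonic(), answer)

cache = AnswerCache()
cache.put("What was net revenue for March?", [(10500.0,)])
print(cache.get("Show me March net revenue, please"))  # [(10500.0,)]
```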

Done correctly, these operational controls are invisible to end users, but they keep the agent safe, affordable, and stable at scale.

This is the difference between a demo agent and an enterprise-grade data agent.