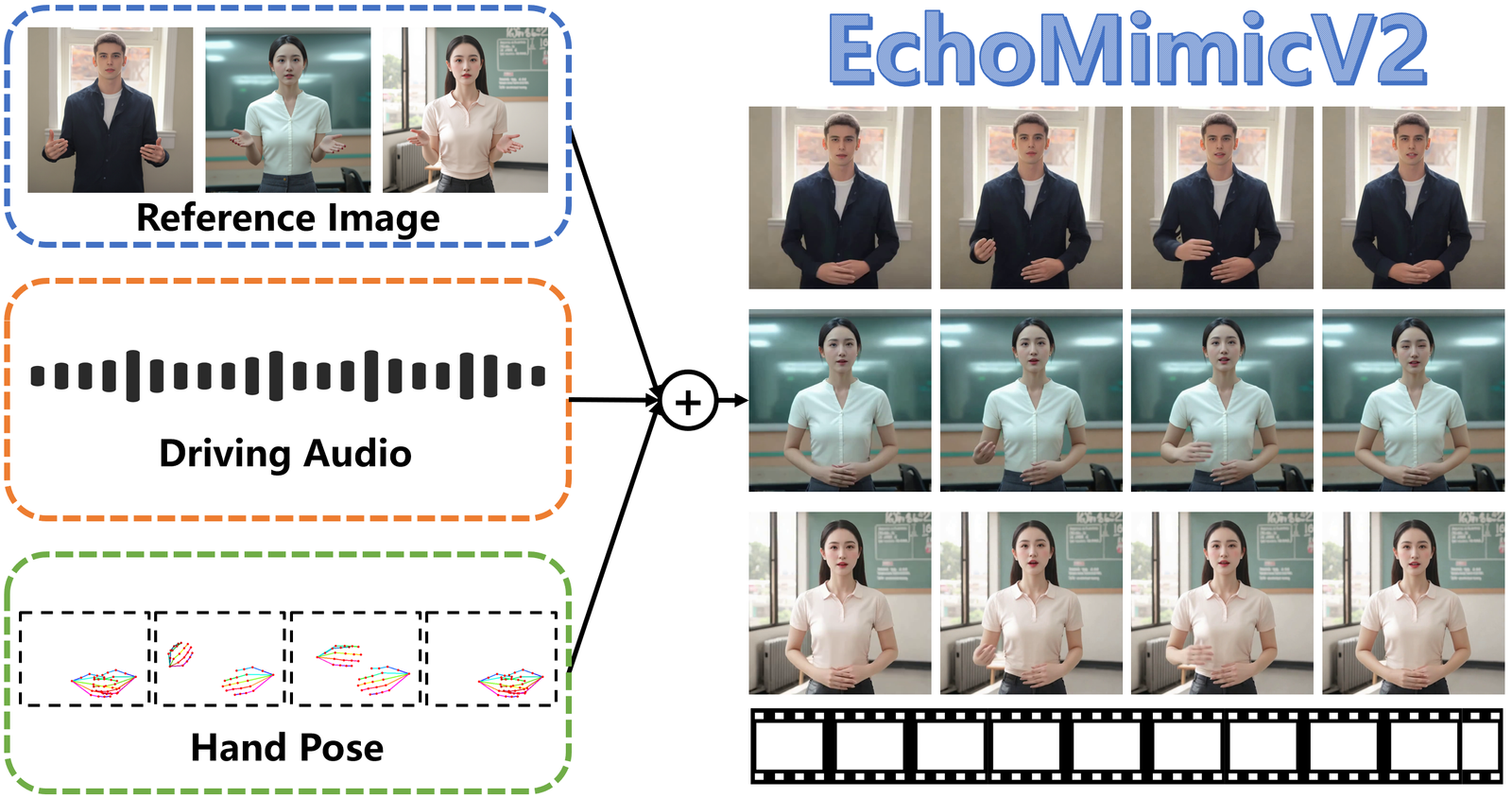

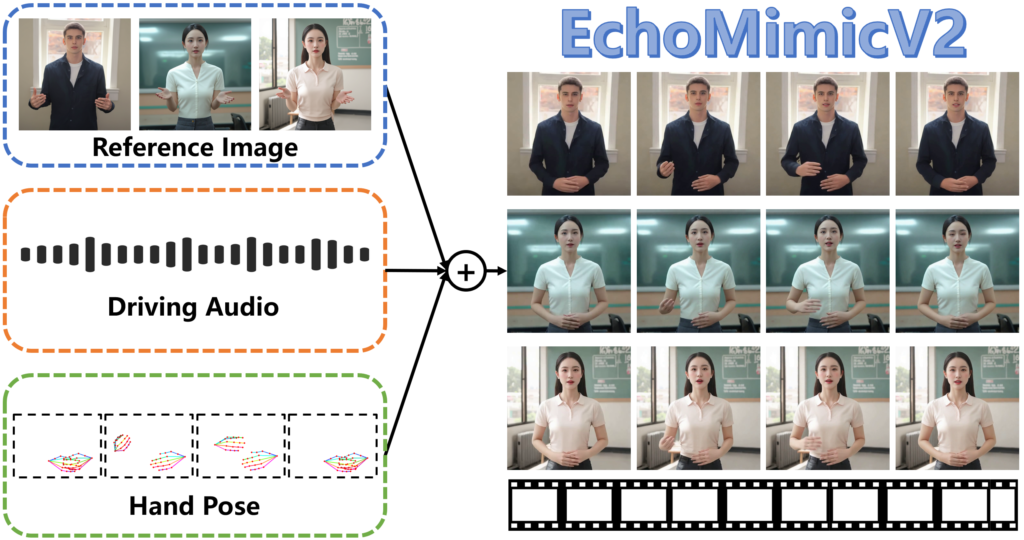

Free AI Avatar Creation Platform

Are you fascinated by AI avatar platforms like D-ID, HeyGen, or Akool and want to build your own for free? This post walks through the technical details of building a free AI avatar creation platform on top of EchoMimicV2, which creates lifelike animated avatars from just a reference image, an audio clip, and hand poses. Here’s your guide to building this from scratch.

EchoMimicV2: Your Free AI Avatar Creation Platform

EchoMimicV2, described in its accompanying research paper, is an approach to half-body human animation. It achieves strong results with a simplified set of conditions, using a novel Audio-Pose Dynamic Harmonization strategy that combines audio and pose conditions to generate expressive facial and gestural animations. This makes it a good foundation for building your free AI avatar creation platform. Key advantages include:

- Simplified Conditions: Unlike other methods that use cumbersome control conditions, EchoMimicV2 is designed to be efficient, making it easier to implement and customize.

- Audio-Pose Dynamic Harmonization (APDH): This strategy coordinates the audio and pose conditions so that speech and gestures stay in sync, enabling lifelike animations.

- Head Partial Attention (HPA): EchoMimicV2 can seamlessly integrate headshot data to enhance facial expressions, even when full-body data is scarce.

- Phase-Specific Denoising Loss (PhD Loss): Optimizes animation quality by focusing on motion, detail, and low-level visual fidelity during specific phases of the denoising process.

Technical Setup: Getting Started with EchoMimicV2

To create your own free platform, you will need a development environment. Here’s how to set it up, covering both automated and manual options.

1. Cloning the Repository

First, clone the EchoMimicV2 repository from GitHub:

git clone https://github.com/antgroup/echomimic_v2

cd echomimic_v2

2. Automated Installation (Linux)

For a quick setup, especially on Linux systems, use the provided script:

sh linux_setup.sh

This handles most of the environment setup, provided CUDA >= 11.7 and Python 3.10 are already installed.
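Before running the setup script, it can help to confirm those two prerequisites. The following is a minimal sketch, not part of the EchoMimicV2 repository: the helper names `parse_cuda_release` and `check_prerequisites` are hypothetical, and it assumes the CUDA toolkit's `nvcc` is on your PATH (a driver-only install will not have it, in which case the check simply reports that it could not confirm the version).

```python
import re
import shutil
import subprocess
import sys

MIN_CUDA = (11, 7)       # minimum CUDA version stated in this guide
WANTED_PYTHON = (3, 10)  # Python version the setup script expects


def parse_cuda_release(nvcc_output):
    """Extract (major, minor) from `nvcc --version` text, or None if absent."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    return (int(match.group(1)), int(match.group(2))) if match else None


def check_prerequisites(python_version=None, nvcc_output=None):
    """Return a list of problems found; an empty list means both checks passed."""
    problems = []

    if python_version is None:
        python_version = sys.version_info[:2]
    python_version = tuple(python_version)[:2]
    if python_version != WANTED_PYTHON:
        problems.append("Python %d.%d found, 3.10 expected" % python_version)

    if nvcc_output is None:
        nvcc = shutil.which("nvcc")
        if nvcc is None:
            problems.append("nvcc not found on PATH; cannot confirm CUDA version")
            return problems
        nvcc_output = subprocess.run(
            [nvcc, "--version"], capture_output=True, text=True
        ).stdout

    cuda = parse_cuda_release(nvcc_output)
    if cuda is None:
        problems.append("could not parse a CUDA version from nvcc output")
    elif cuda < MIN_CUDA:
        problems.append("CUDA %d.%d found, >= 11.7 expected" % cuda)
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print("WARNING:", problem)
```

Both arguments are optional so the function can be exercised with canned values; called with no arguments it inspects the current interpreter and the local `nvcc`.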

3. Manual Installation (Detailed)

If the automated installation doesn’t work for you, here’s how to set things up manually:

3.1. Python Environment:

- System: The system has been tested on CentOS 7.2/Ubuntu 22.04 with CUDA >= 11.7

- GPUs: Recommended GPUs are A100(80G) / RTX4090D (24G) / V100(16G)

- Python: Tested with Python versions 3.8 / 3.10 / 3.11. Python 3.10 is strongly recommended.

Create and activate a new conda environment:

conda create -n echomimic python=3.10

conda activate echomimic

3.2. Install Required Packages

pip install pip -U

pip install torch==2.5.1 torchvision==0.20.1 torchaudio==2.5.1 xformers==0.0.28.post3 --index-url https://download.pytorch.org/whl/cu124

pip install torchao --index-url https://download.pytorch.org/whl/nightly/cu124

pip install -r requirements.txt

pip install --no-deps facenet_pytorch==2.6.0

3.3. Download FFmpeg:

Download and extract ffmpeg-static, and set the FFMPEG_PATH variable:

export FFMPEG_PATH=/path/to/ffmpeg-4.4-amd64-static

3.4. Download Pretrained Weights:

Use Git LFS to manage large files:

git lfs install

git clone https://huggingface.co/BadToBest/EchoMimicV2 pretrained_weights

The pretrained_weights directory will have the following structure:

./pretrained_weights/

├── denoising_unet.pth

├── reference_unet.pth

├── motion_module.pth

├── pose_encoder.pth

├── sd-vae-ft-mse

│   └── ...

└── audio_processor

    └── tiny.pt

These are the core components for your AI avatar creation platform.
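A quick preflight check can catch an incomplete download or an unset FFMPEG_PATH before you launch anything. The sketch below is an illustration rather than code from the repository: `missing_weights` and `ffmpeg_path_ok` are hypothetical helper names. The expected file list is copied from the directory listing above, and the check assumes FFMPEG_PATH points at the extracted ffmpeg-static directory.

```python
import os
from pathlib import Path

# File and directory names taken from the listing in this guide.
EXPECTED_FILES = [
    "denoising_unet.pth",
    "reference_unet.pth",
    "motion_module.pth",
    "pose_encoder.pth",
    "audio_processor/tiny.pt",
]
EXPECTED_DIRS = ["sd-vae-ft-mse"]


def missing_weights(root="./pretrained_weights"):
    """Return the expected entries that are absent under `root`."""
    root = Path(root)
    missing = [name for name in EXPECTED_FILES if not (root / name).is_file()]
    missing += [name + "/" for name in EXPECTED_DIRS if not (root / name).is_dir()]
    return missing


def ffmpeg_path_ok(env=None):
    """True if FFMPEG_PATH is set and names an existing directory."""
    env = os.environ if env is None else env
    value = env.get("FFMPEG_PATH", "")
    return bool(value) and Path(value).is_dir()


if __name__ == "__main__":
    for name in missing_weights():
        print("missing:", name)
    if not ffmpeg_path_ok():
        print("FFMPEG_PATH is unset or does not point to a directory")
```

An empty list from `missing_weights()` only confirms that the files exist. It does not verify that Git LFS fetched the real weights rather than pointer files, so a file-size check may also be worthwhile.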

4. Running the Platform

Now that everything is set up, let’s look at running the code.

4.1. Gradio Demo

To launch the Gradio demo, run:

python app.py

4.2. Python Inference Script

To run inference from the command line, use:

python infer.py --config='./configs/prompts/infer.yaml'

4.3. Accelerated Inference

For faster results, run the accelerated script with its own configuration file:

python infer_acc.py --config='./configs/prompts/infer_acc.yaml'

5. Preparing and Processing the EMTD Dataset

EchoMimicV2 offers a dataset for testing half-body animation. Here is how to download, slice, and preprocess it:

python ./EMTD_dataset/download.py

bash ./EMTD_dataset/slice.sh

python ./EMTD_dataset/preprocess.py

Diving Deeper: Customization & Advanced Features

With the base system set up, explore the customization opportunities that will make your Free AI Avatar Creation Platform stand out:

- Adjusting Training Parameters: Experiment with parameters like learning rates, batch sizes, and the duration of various training phases to optimize performance and tailor your platform to specific needs.

- Integrating Custom Datasets: Train the model on your own datasets of reference images, audio, and poses to create avatars with your specific look, voice, and behavior.

- Refining Animation Quality: PhD Loss targets motion, detail, and low-level visual quality in different phases of the denoising process, so adjusting how those phases are configured is one way to tune output quality.
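To make the idea of phase-specific losses concrete, here is a small illustrative sketch. It is not the EchoMimicV2 implementation. It assumes the three phases occur in the order motion, detail, low-level quality as denoising moves from the noisiest step to the cleanest, and the equal-thirds boundaries, the phase names, and the functions `phd_phase` and `phase_loss_weights` are all hypothetical.

```python
PHASES = ("motion", "detail", "low_level")


def phd_phase(step, total_steps, boundaries=(1 / 3, 2 / 3)):
    """Map a denoising step index (0 = noisiest) to a phase name.

    `boundaries` are hypothetical split points expressed as fractions of
    the schedule; they are not values taken from the paper or repository.
    """
    if not 0 <= step < total_steps:
        raise ValueError("step must satisfy 0 <= step < total_steps")
    progress = step / total_steps
    if progress < boundaries[0]:
        return PHASES[0]
    if progress < boundaries[1]:
        return PHASES[1]
    return PHASES[2]


def phase_loss_weights(step, total_steps):
    """Return per-objective weights with only the active phase switched on."""
    active = phd_phase(step, total_steps)
    return {name: (1.0 if name == active else 0.0) for name in PHASES}


if __name__ == "__main__":
    for step in (0, 15, 29):
        print(step, phd_phase(step, 30), phase_loss_weights(step, 30))
```

In a real training loop the weights would multiply the corresponding loss terms. Moving the boundaries or softening the on/off weights are the kinds of adjustments this bullet has in mind.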

Building a free AI avatar creation platform is a challenging yet achievable task. This post covered the first step toward that goal: getting the EchoMimicV2 framework installed and running. Its approach simplifies the control of animated avatars and offers a solid foundation for further improvement and customization. By leveraging its Audio-Pose Dynamic Harmonization, Head Partial Attention, and Phase-Specific Denoising Loss, you can create a captivating, free avatar creation experience for your audience.