ECL vs RAG: A Deep Dive into Two Innovative AI Approaches

In the world of advanced AI, particularly with large language models (LLMs), two innovative approaches stand out: the External Continual Learner (ECL) and Retrieval-Augmented Generation (RAG). While both aim to enhance the capabilities of AI models, they serve different purposes and use distinct mechanisms. Understanding the nuances of ECL vs RAG is essential for choosing the right method for your specific needs.

What is an External Continual Learner (ECL)?

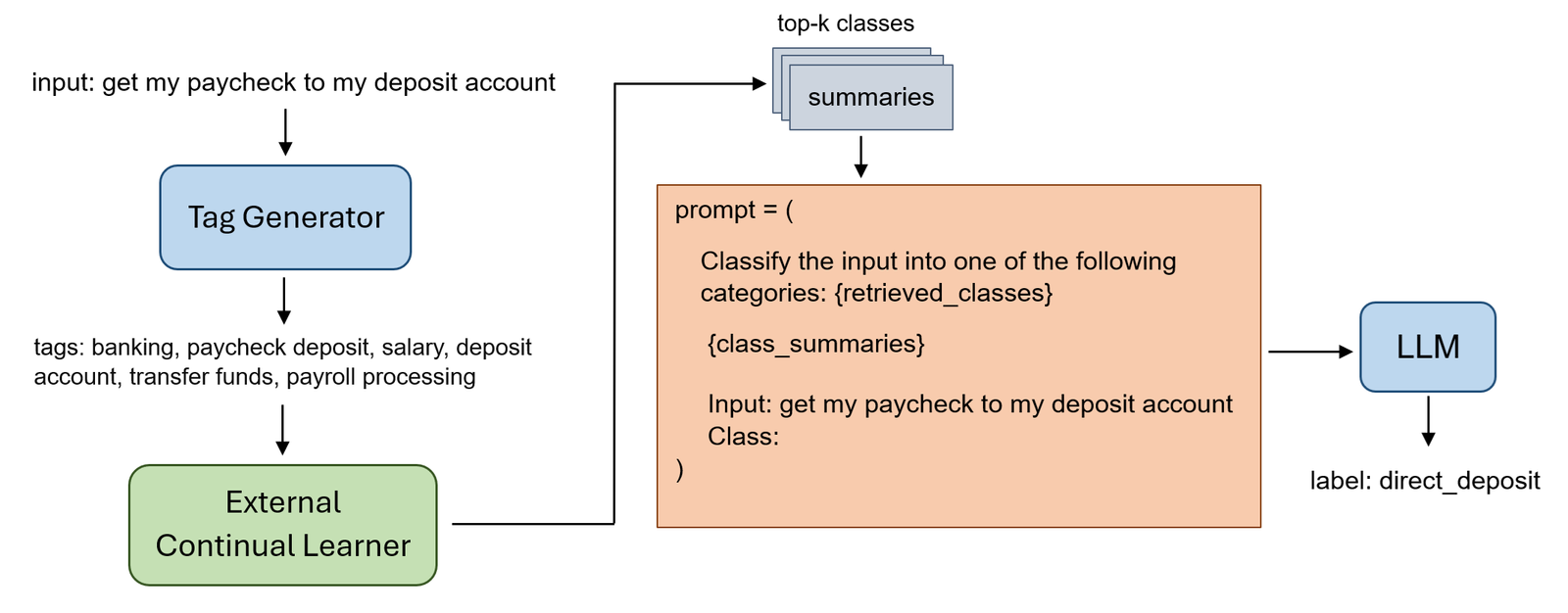

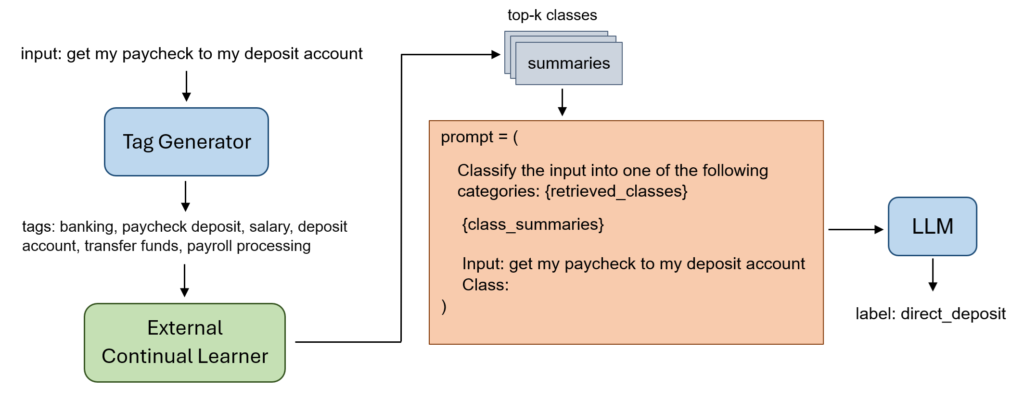

An External Continual Learner (ECL) is a method designed to help large language models (LLMs) learn incrementally without suffering from catastrophic forgetting. The ECL is an external module that, for each new input, selects the small set of classes most likely to be relevant, so the LLM only has to consider those. Because the LLM's own parameters are never updated, learning new tasks does not overwrite its previously acquired knowledge.

The core features of the ECL include:

- Incremental Learning: The ability to learn continuously without forgetting past knowledge.

- Tag Generation: Using the LLM to generate descriptive tags for input text.

- Gaussian Class Representation: Modeling each class as a Gaussian distribution over the embeddings of its tags.

- Mahalanobis Distance Scoring: Selecting the most relevant classes for each input using distance calculations.
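To make the last two features concrete, here is a minimal sketch of the ECL's bookkeeping in plain Python. It assumes each input's tags have already been embedded (by some embedding model, not shown) and averaged into a single vector, and it simplifies each class's Gaussian to a per-class mean plus a diagonal covariance shared by all classes; a full covariance matrix would follow the same idea. The class name `ExternalContinualLearner` and the toy labels are hypothetical.

```python
import math
from typing import Dict, List, Sequence

# Assumption: inputs are pre-computed, averaged tag embeddings
Vector = Sequence[float]


class ExternalContinualLearner:
    """Toy ECL: each class is a mean vector plus a shared diagonal covariance.

    Simplification (assumption): the covariance is diagonal and shared by all
    classes. The object keeps only running statistics, never the vectors.
    """

    def __init__(self, dim: int, eps: float = 1e-6) -> None:
        self.dim = dim
        self.eps = eps                      # keeps variances strictly positive
        self.means: Dict[str, List[float]] = {}
        self.scatter = [0.0] * dim          # summed squared deviations from class means
        self.count = 0                      # total vectors seen across all classes

    def add_class(self, label: str, vectors: List[Vector]) -> None:
        """Learn one new class from its tag embeddings (one incremental step)."""
        n = len(vectors)
        mean = [sum(v[d] for v in vectors) / n for d in range(self.dim)]
        for v in vectors:
            for d in range(self.dim):
                self.scatter[d] += (v[d] - mean[d]) ** 2
        self.count += n
        self.means[label] = mean

    def _shared_variance(self) -> List[float]:
        return [s / max(self.count, 1) + self.eps for s in self.scatter]

    def mahalanobis(self, x: Vector, label: str, var: List[float]) -> float:
        mu = self.means[label]
        return math.sqrt(sum((x[d] - mu[d]) ** 2 / var[d] for d in range(self.dim)))

    def top_k(self, x: Vector, k: int) -> List[str]:
        """Return the k classes whose Gaussians lie closest to x."""
        var = self._shared_variance()
        return sorted(self.means, key=lambda c: self.mahalanobis(x, c, var))[:k]


ecl = ExternalContinualLearner(dim=2)
# Task 1: two hypothetical classes with toy 2-D "tag embeddings"
ecl.add_class("billing", [[1.0, 0.0], [1.2, 0.1], [0.9, -0.1]])
ecl.add_class("shipping", [[0.0, 1.0], [0.1, 1.1], [-0.1, 0.9]])
# Task 2 arrives later; earlier class means stay untouched, only the shared variance is refined
ecl.add_class("refunds", [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1]])
print(ecl.top_k([1.05, 0.05], k=2))  # → ['billing', 'refunds']
```

Adding a class only stores one mean and updates the shared scatter, so its cost does not depend on how many classes came before, and no earlier training data has to be revisited.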

The goal of the ECL is to streamline in-context learning (ICL) by limiting the prompt to examples from the few classes it ranks as most relevant, rather than every class seen so far. This keeps the prompt short as the number of classes grows, addressing ICL's scalability problem.
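A minimal sketch of that prompt-size effect, assuming the ECL has already ranked the candidate classes. What the prompt contains for each class is an assumption here (a one-line description stands in for per-class examples), and `build_icl_prompt` and the class names are hypothetical.

```python
from typing import Dict, List


def build_icl_prompt(text: str, candidates: List[str], class_context: Dict[str, str]) -> str:
    """Build a classification prompt that mentions only the candidate classes."""
    lines = ["Classify the input into one of the following classes:"]
    for label in candidates:
        # Assumption: one description per class stands in for per-class examples
        lines.append(f"- {label}: {class_context[label]}")
    lines.append(f"Input: {text}")
    lines.append("Label:")
    return "\n".join(lines)


# 50 classes learned so far (hypothetical descriptions)
class_context = {f"class_{i}": f"Description of class {i}." for i in range(50)}
query = "My card was charged twice."

full_prompt = build_icl_prompt(query, list(class_context), class_context)
# Suppose the ECL ranked these three classes as the most relevant
short_prompt = build_icl_prompt(query, ["class_3", "class_7", "class_9"], class_context)
print(len(full_prompt), len(short_prompt))
```

The prompt now grows with the number of classes the ECL selects, not with the total number of classes learned.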

What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) is a framework that enhances the performance of large language models by providing them with external information during the generation process. Instead of relying solely on their pre-trained knowledge, RAG models access a knowledge base and retrieve relevant snippets of information to inform the generation.

The key aspects of RAG include:

- External Knowledge Retrieval: Accessing an external repository (e.g., a database or document collection) for relevant information.

- Contextual Augmentation: Using the retrieved information to enhance the input given to the LLM.

- Generation Phase: The LLM generates text based on the augmented input.

- Focus on Content: RAG aims to add domain-specific or real-time knowledge to content generation.
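The retrieve, augment, and generate steps can be sketched in a few lines. This version swaps in simple bag-of-words cosine similarity for the dense embeddings and vector index a production RAG system would typically use, and it stops at building the augmented prompt, since the generation call depends on whichever LLM you use. The function names and the toy documents are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a stand-in (assumption) for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """External knowledge retrieval: score every document and keep the top k."""
    q = bag_of_words(query)
    scored = [(cosine(q, bag_of_words(d)), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def augment(query: str, passages: List[str]) -> str:
    """Contextual augmentation: put the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical knowledge base
knowledge_base = [
    "The Lyon warehouse ships orders within two business days.",
    "Refunds are issued to the original payment method within 14 days.",
    "The support line is open Monday to Friday.",
]
question = "How long do refunds take?"
top = retrieve(question, knowledge_base, k=1)
prompt = augment(question, [doc for _, doc in top])
print(prompt)  # generation phase: send `prompt` to the LLM of your choice (not shown)
```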

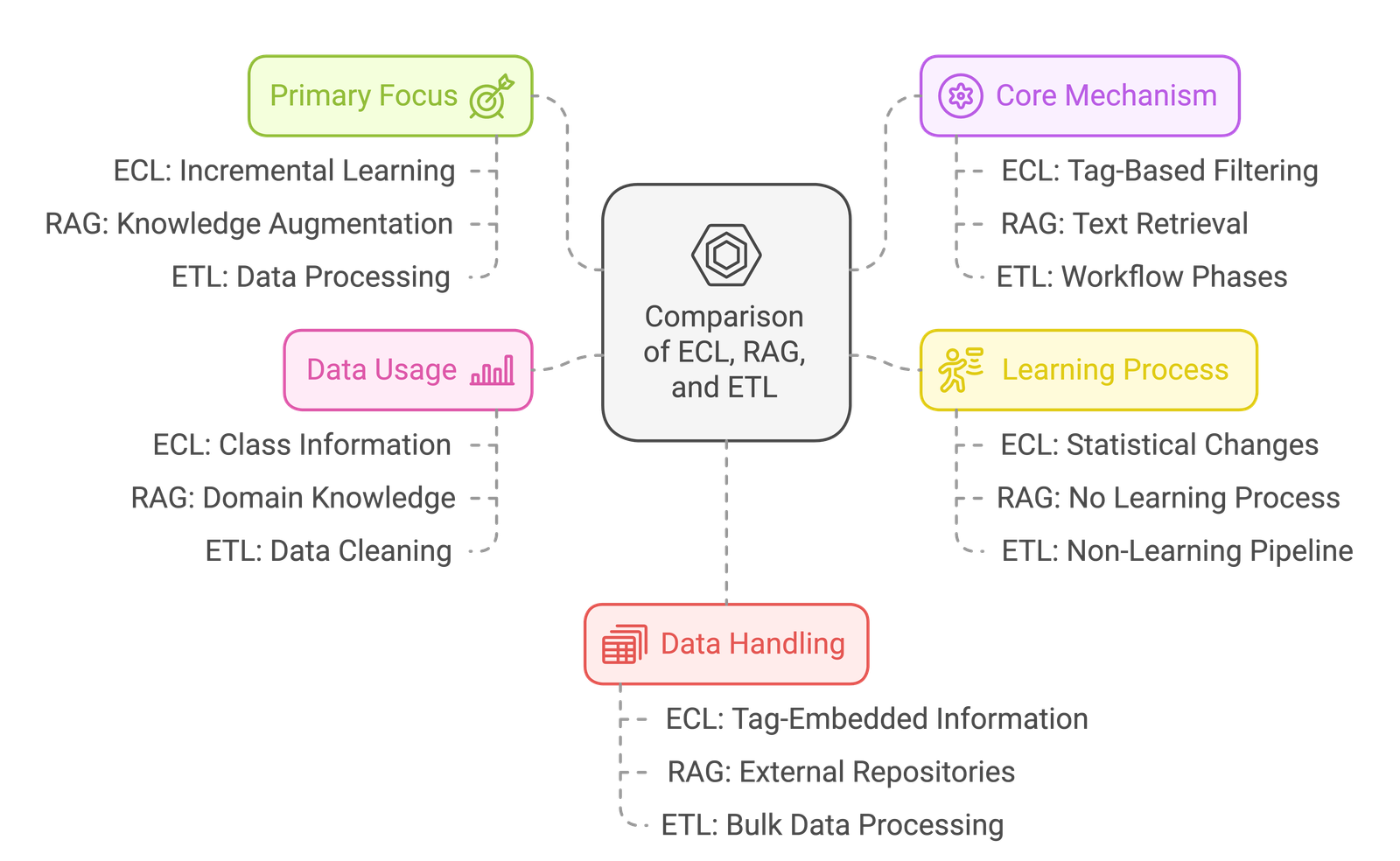

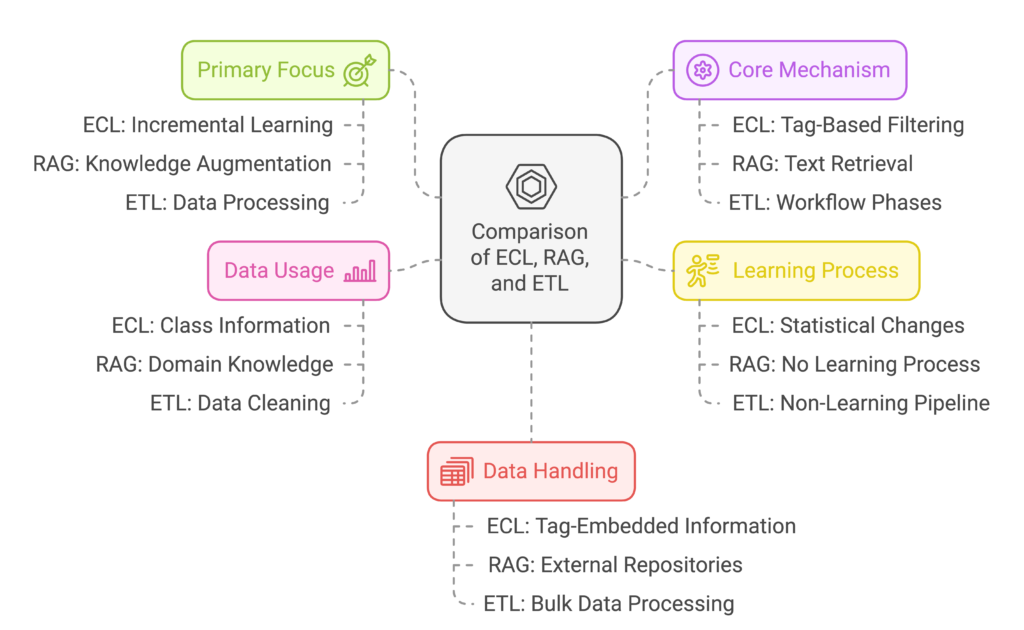

Key Differences: ECL vs RAG

While both ECL and RAG aim to enhance LLMs, their fundamental approaches differ. Here’s a breakdown of the key distinctions between ECL vs RAG:

- Purpose: The ECL is focused on enabling continual learning and preventing forgetting, while RAG is centered around providing external knowledge for enhanced generation.

- Method of Information Use: The ECL uses statistical measures to filter context, selecting the relevant classes for an in-context learning prompt. RAG retrieves specific text snippets from an external source and uses them for text generation.

- Learning Mechanism: The ECL learns class statistics incrementally, without storing training instances, to deal with catastrophic forgetting (CF) and inter-task class separation (ICS). RAG does not learn from external data; it retrieves and uses that data during the generation process.

- Scalability and Efficiency: The ECL focuses on keeping the prompt's context length manageable, which makes ICL scalable. RAG adds a retrieval step (searching the knowledge base and processing the results) before generation, which can add latency and computational cost.

- Application: ECL is well-suited for class-incremental learning, where the goal is to learn a sequence of classification tasks. RAG excels in scenarios that require up-to-date information or context from an external knowledge base.

- Text Retrieval vs Tag-based Classification: RAG uses text-based similarity search to find relevant passages, whereas the ECL uses tag embeddings to score how similar an input is to each class.

When to Use ECL vs RAG

The choice between ECL and RAG depends on the specific problem you are trying to solve.

- Choose ECL when:

  - You need to train a classifier with class-incremental learning.

  - You want to avoid catastrophic forgetting and improve scalability in ICL settings.

  - Your task requires focusing the prompt on the classes most relevant to each input, out of all the classes learned so far.

- Choose RAG when:

  - You need to incorporate external knowledge into the output of LLMs.

  - You are working with information that is not present in the model’s pre-training data.

  - The aim is to provide up-to-date information or domain-specific context for text generation.

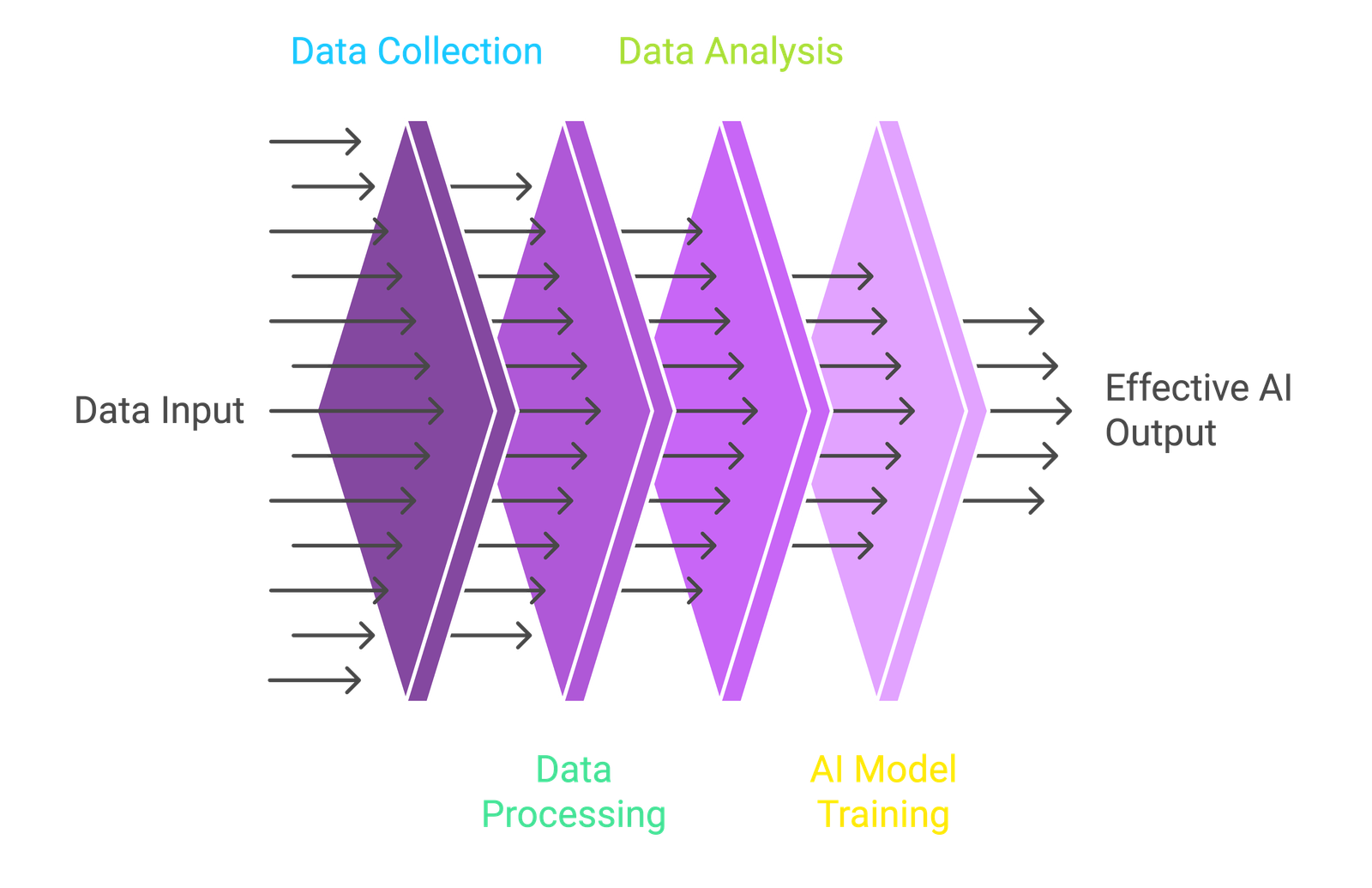

What is ETL? A Simple Explanation of Extract, Transform, Load

In the realm of data management, ETL stands for Extract, Transform, Load. It’s a fundamental process used to integrate data from multiple sources into a unified, centralized repository, such as a data warehouse or data lake. Understanding what ETL is matters for anyone working with data, as it forms the backbone of data warehousing and business intelligence (BI) systems.

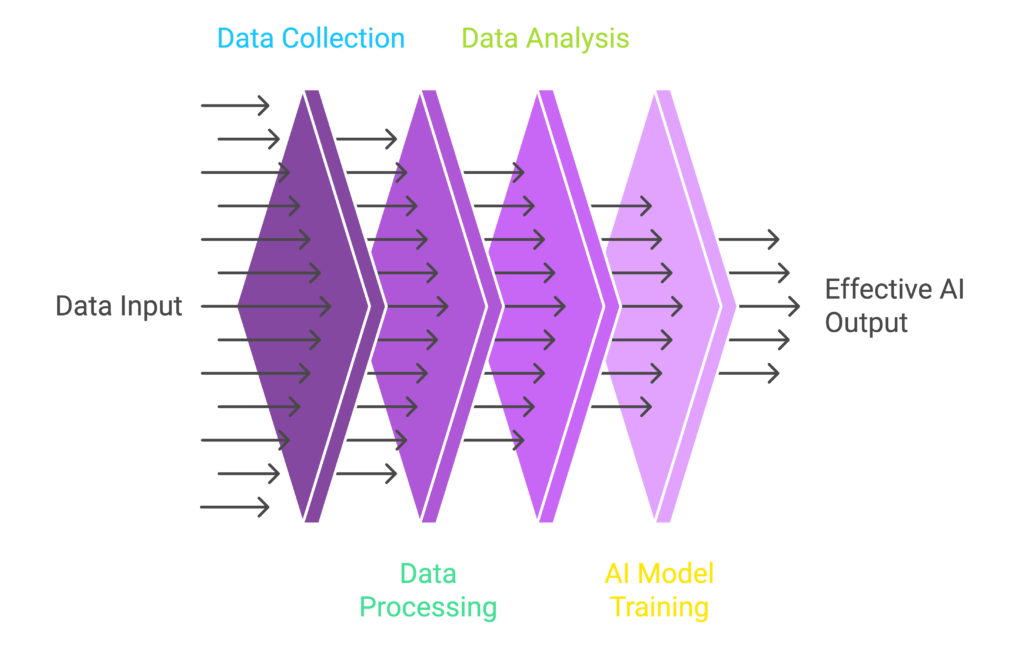

Breaking Down the ETL Process

The ETL process involves three main stages: Extract, Transform, and Load. Let’s explore each of these steps in detail:

1. Extract

The extract stage is the initial step in the ETL process, where data is gathered from various sources. These sources can be diverse, including:

- Relational Databases: Such as MySQL, PostgreSQL, Oracle, and SQL Server.

- NoSQL Databases: Like MongoDB, Cassandra, and Couchbase.

- APIs: Data extracted from various applications or platforms via their APIs.

- Flat Files: Data from CSV, TXT, JSON, and XML files.

- Cloud Services: Data sources like AWS, Google Cloud, and Azure platforms.

During the extract stage, the ETL tool reads data from these sources, ensuring all required data is captured while minimizing the impact on the source system’s performance. This data is often pulled in its raw format.
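As a small illustration of the extract stage, the sketch below reads from two of the source types listed above, a CSV file and a JSON payload, using only Python's standard library. In-memory strings stand in for real files or API responses (an assumption that keeps the example self-contained), and the CSV reader yields rows lazily instead of materializing the whole file. Values are left exactly as they arrive, so the CSV fields are strings while the JSON `id` is an integer; reconciling that is the transform stage's job. The function names are hypothetical.

```python
import csv
import io
import json
from typing import Dict, Iterator, List, TextIO


def extract_csv(stream: TextIO) -> Iterator[Dict[str, str]]:
    """Yield CSV rows lazily as dicts; every value stays a raw string."""
    yield from csv.DictReader(stream)


def extract_json(stream: TextIO) -> List[dict]:
    """Parse a JSON array of records exactly as the source delivered it."""
    return json.load(stream)


# In-memory stand-ins (assumption) for a CSV export and an API response
csv_source = io.StringIO("id,name,signup_date\n1,Ada,2024-01-05\n2,Grace,05/02/2024\n")
json_source = io.StringIO('[{"id": 3, "name": "Linus", "signup_date": "2024-03-01"}]')

raw = list(extract_csv(csv_source)) + extract_json(json_source)
print(len(raw), raw[0]["id"], raw[2]["id"])  # → 3 1 3
```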

2. Transform

The transform stage is where the extracted data is cleaned, processed, and converted into a format that suits the target system and is ready for analysis. This stage often involves several tasks:

- Data Cleaning: Removing or correcting errors, inconsistencies, duplicates, and incomplete data.

- Data Standardization: Converting data to a common format (e.g., date and time, units of measure) for consistency.

- Data Mapping: Ensuring that the data fields from source systems correspond correctly to fields in the target system.

- Data Aggregation: Combining data to provide summary views and derived calculations.

- Data Enrichment: Enhancing the data with additional information from other sources.

- Data Filtering: Removing unnecessary data based on specific rules.

- Data Validation: Ensuring that the data conforms to predefined business rules and constraints.

The transformation process is crucial for ensuring the quality, reliability, and consistency of the data.
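Several of the transformation tasks above can be combined in one pass. The sketch below cleans names, standardizes dates to ISO 8601, maps the `id` field to an integer, validates required fields, drops duplicates, and finishes with a small aggregation. The field names, the toy rows, and the two accepted date formats are assumptions; in particular, reading `05/02/2024` as day-first is a choice a real pipeline would need to confirm with the source owner.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, List, Optional

# Assumed source date formats; "%d/%m/%Y" reads 05/02/2024 as 5 February
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def standardize_date(value: str) -> Optional[str]:
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def transform(rows: List[Dict]) -> List[Dict]:
    seen_ids = set()
    out = []
    for row in rows:
        try:
            rid = int(row["id"])                           # mapping: str/int -> int
        except (KeyError, TypeError, ValueError):
            continue                                       # validation: unusable id
        name = str(row.get("name", "")).strip().title()    # cleaning
        date = standardize_date(str(row.get("signup_date", "")))  # standardization
        if not name or date is None:                       # validation: required fields
            continue
        if rid in seen_ids:                                # de-duplication: keep first
            continue
        seen_ids.add(rid)
        out.append({"id": rid, "name": name, "signup_date": date})
    return out


def signups_per_month(rows: List[Dict]) -> Dict[str, int]:
    """Aggregation: count clean records per YYYY-MM."""
    return dict(Counter(r["signup_date"][:7] for r in rows))


# Hypothetical raw records, as they might come out of the extract stage
raw = [
    {"id": "1", "name": "  ada lovelace ", "signup_date": "2024-01-05"},
    {"id": "2", "name": "GRACE HOPPER", "signup_date": "05/02/2024"},
    {"id": "2", "name": "Grace Hopper", "signup_date": "2024-02-05"},  # duplicate id
    {"id": "x", "name": "Bad Row", "signup_date": "2024-01-01"},       # invalid id
    {"id": 3, "name": "Linus", "signup_date": "not a date"},           # invalid date
]
clean = transform(raw)
print(clean)
print(signups_per_month(clean))  # → {'2024-01': 1, '2024-02': 1}
```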

3. Load

The load stage is the final step, where the transformed data is written into the target system. Common targets include:

- Data Warehouse: A central repository for large amounts of structured data.

- Data Lake: A repository for storing both structured and unstructured data in its raw format.

- Relational Databases: Where processed data will be used for reporting and analysis.

- Application Systems: Business applications that consume the processed data directly.

The load process can involve a full load, which loads all data, or an incremental load, which loads only the changes since the last load. The goal is to ensure data is written efficiently and accurately.
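The difference between a full and an incremental load can be sketched with Python's built-in `sqlite3` module, with an in-memory SQLite database standing in for the target system. The incremental load here tracks a high-watermark on an `updated_at` column, which is one common approach but an assumption of this sketch; it also assumes timestamps are ISO 8601 strings, which sort correctly as text. The table, column, and function names are hypothetical.

```python
import sqlite3
from typing import Dict, List

UPSERT = ("INSERT OR REPLACE INTO customers (id, name, updated_at) "
          "VALUES (:id, :name, :updated_at)")


def create_target(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT NOT NULL, updated_at TEXT NOT NULL)"
    )


def full_load(conn: sqlite3.Connection, rows: List[Dict]) -> None:
    """Replace the whole table with the current batch, in one transaction."""
    with conn:  # commits on success, rolls back on error
        conn.execute("DELETE FROM customers")
        conn.executemany(UPSERT, rows)


def incremental_load(conn: sqlite3.Connection, rows: List[Dict], watermark: str) -> str:
    """Write only rows changed since the watermark; return the new watermark."""
    # Assumption: updated_at holds ISO 8601 strings, so text comparison orders them
    changed = [r for r in rows if r["updated_at"] > watermark]
    with conn:
        conn.executemany(UPSERT, changed)  # inserts new ids, overwrites changed ones
    return max((r["updated_at"] for r in changed), default=watermark)


conn = sqlite3.connect(":memory:")
create_target(conn)

initial = [
    {"id": 1, "name": "Ada", "updated_at": "2024-01-05T10:00:00"},
    {"id": 2, "name": "Grace", "updated_at": "2024-01-06T09:00:00"},
]
full_load(conn, initial)
watermark = max(r["updated_at"] for r in initial)

# Later snapshot of the source: id 2 changed, id 3 is new, id 1 is unchanged
snapshot = [
    {"id": 1, "name": "Ada", "updated_at": "2024-01-05T10:00:00"},
    {"id": 2, "name": "Grace Hopper", "updated_at": "2024-02-01T08:00:00"},
    {"id": 3, "name": "Linus", "updated_at": "2024-02-02T12:00:00"},
]
watermark = incremental_load(conn, snapshot, watermark)
print(conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall())
# → [(1, 'Ada'), (2, 'Grace Hopper'), (3, 'Linus')]
```

A watermark-based incremental load like this does not notice rows deleted at the source; handling deletes usually requires soft-delete flags or change data capture.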

Why is ETL Important?

The ETL process is critical for several reasons:

- Data Consolidation: It brings together data from different sources into a unified view, breaking down data silos.

- Data Quality: By cleaning, standardizing, and validating data, ETL enhances the reliability and accuracy of the information.

- Data Preparation: It transforms raw data into an analysis-ready form, making it usable for reporting and business intelligence.

- Data Accessibility: ETL makes data accessible and actionable, allowing organizations to gain insights and make data-driven decisions.

- Improved Efficiency: By automating data integration, ETL saves time and resources while reducing the risk of human errors.

When to Use ETL?

The ETL process is particularly useful for organizations that:

- Handle a diverse range of data from various sources.

- Require high-quality, consistent, and reliable data.

- Need to create data warehouses or data lakes.

- Use data to enable business intelligence or data-driven decision-making.

ECL vs RAG

| Feature | ECL (External Continual Learner) | RAG (Retrieval-Augmented Generation) |
| --- | --- | --- |
| Purpose | Incremental learning, prevent forgetting | Enhanced text generation via external knowledge |
| Method | Tag-based filtering and statistical selection of relevant classes | Text-based retrieval of relevant information from an external source |
| Learning | Incremental statistical learning; no LLM parameter update | No learning; rather, retrieval of external information |
| Data Handling | Uses tagged data to optimize prompts | Uses text queries to retrieve from external knowledge bases |
| Focus | Managing prompt size for effective ICL | Augmenting text generation with external knowledge |
| Parameter Updates | External module parameters updated; no LLM parameter update | No parameter updates at all |

ETL vs RAG

| Feature | ETL (Extract, Transform, Load) | RAG (Retrieval-Augmented Generation) |
| --- | --- | --- |
| Purpose | Data migration, transformation, and preparation | Enhanced text generation via external knowledge |
| Method | Data extraction, transformation, and loading | Text-based retrieval of relevant information from an external source |
| Learning | No machine learning; a data processing pipeline | No learning; rather, retrieval of external information |
| Data Handling | Works with bulk data at rest | Utilizes text-based queries for dynamic data retrieval |
| Focus | Preparing data for storage or analytics | Augmenting text generation with external knowledge |
| Parameter Updates | No parameter update; rules are predefined | No parameter updates at all |

The terms ECL, RAG, and ETL represent distinct but important approaches in AI and data management. The External Continual Learner (ECL) helps LLMs learn incrementally. Retrieval-Augmented Generation (RAG) enhances text generation with external knowledge. ETL is a data management process for data migration and preparation. In practice, that means reaching for ECL when an LLM must keep learning new classes without forgetting old ones, RAG when generation needs knowledge the model does not have, and ETL when data from many sources must be cleaned and consolidated before it can be analyzed.